
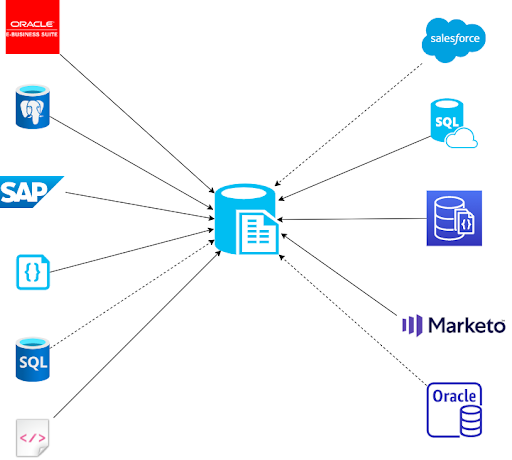

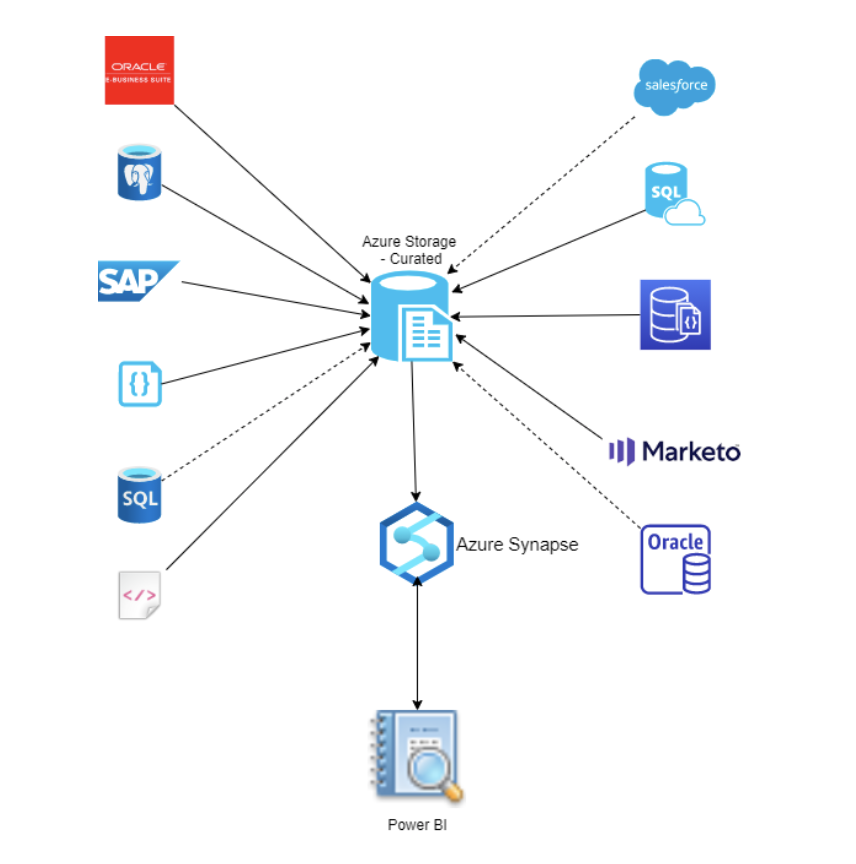

Is your data scattered amongst cloud providers, on-premise systems, databases, file shares, and SaaS applications? Do you need to connect these systems to automate and streamline your business processes? Perhaps you see the need to unify your data to extract valuable insights as my colleague Madison talks about here. Many organizations recognize the incredible value in bringing their systems and data together but struggle to execute for lack of strategic vision, inadequate skill sets, or just not knowing where to begin.

A study by Forbes Insights found that only 13% of companies are “leaders” in leveraging data, and 89% of executives see a moderate to high risk of digital disruption likely to occur in the next three years. While using data for business insights takes work, Zirous has experience working with companies of all shapes and sizes to set you up for success. Let’s dive into the key things you should consider when beginning a data initiative:

- Roadmap

- Execution

- Optimize Service Capabilities

Roadmap

Starting a new project to unify your systems and data can be overwhelming, so it’s important to create a clearly defined plan with key objectives to stay on track. The following questions are a good starting place:

- What systems are currently siloed that would benefit from being tightly integrated?

- What insights have the potential to transform your business?

- Where does the data necessary to drive those integrations and insights exist today?

- Which analytic tools is your staff comfortable with?

Execution

Azure Data Factory (ADF) is a Microsoft service that can help you untangle your data and bring it together in one place. It is a cloud-based, largely code-free ETL tool with connectors for a wide range of data stores and formats, both in the cloud and on-premises. ADF can transform your data into a unified model, feed it to other systems, and populate data marts that your favorite analytic tools can query to produce actionable insights.

Define Canonical Model

An important step on the journey to unifying your data is to define a canonical model. A canonical model creates a common language for your systems to communicate with each other. This enables connecting new systems quickly and without impacting existing integrations. It also builds a buffer if the underlying systems change their data models. Once established, it provides clear steps about the transformations each data source must go through to get to the desired state.
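To make the idea concrete, here is a minimal sketch in Python of a canonical model with one mapping function per source. Everything in it is hypothetical: the `CanonicalCustomer` fields, the CRM and billing record layouts, and the ID prefixes are assumptions chosen for illustration, not a schema that ADF or any particular system prescribes.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CanonicalCustomer:
    """Hypothetical canonical shape shared by every integration."""
    customer_id: str
    full_name: str
    email: str
    created_on: date


def from_crm(record: dict) -> CanonicalCustomer:
    """Map a record from a hypothetical CRM export to the canonical model."""
    return CanonicalCustomer(
        customer_id=f"crm-{record['Id']}",
        full_name=f"{record['FirstName']} {record['LastName']}".strip(),
        email=record["Email"].strip().lower(),
        created_on=date.fromisoformat(record["CreatedDate"]),
    )


def from_billing(record: dict) -> CanonicalCustomer:
    """Map a row from a hypothetical billing database to the canonical model."""
    return CanonicalCustomer(
        customer_id=f"billing-{record['cust_no']}",
        full_name=record["name"].strip(),
        email=record["contact_email"].strip().lower(),
        created_on=date.fromisoformat(record["opened"]),
    )


# Two differently shaped source records end up in the same canonical form.
crm_customer = from_crm(
    {"Id": 42, "FirstName": "Ada", "LastName": "Lovelace",
     "Email": " Ada@Example.com ", "CreatedDate": "2021-03-01"}
)
billing_customer = from_billing(
    {"cust_no": "A-7", "name": "Grace Hopper",
     "contact_email": "GRACE@example.com", "opened": "2020-11-15"}
)
```

Because each source has its own mapping function, a change in one system’s data model only touches that function; anything downstream keeps consuming `CanonicalCustomer` unchanged.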

Connect and Ingest

One of the first things you need to do when setting up ADF is to build a linked service for each of your systems. There are more than 90 connectors available out of the box that should satisfy most scenarios (list here). Configuration usually amounts to providing a few connection details. If a system is not already reachable from your Azure environment, for example a database on a private on-premises network, you can configure a self-hosted integration runtime that can reach both the system(s) and Azure. Once the linked services exist, you can create datasets and pipelines to pull the raw data into Azure storage.
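Behind the visual editor, a linked service is stored as a small JSON document. The sketch below builds two such definitions in Python. It follows the general shape of ADF's JSON (a `name`, a `type`, `typeProperties`, and an optional `connectVia` reference to an integration runtime) as I understand it, so the connector type names and required properties should be checked against the documentation for your connectors. The helper `linked_service`, the resource names, and the placeholder connection strings are all hypothetical.

```python
import json


def linked_service(name, service_type, type_properties, integration_runtime=None):
    """Build a linked service definition as a plain dict (illustrative helper)."""
    definition = {
        "name": name,
        "properties": {"type": service_type, "typeProperties": type_properties},
    }
    if integration_runtime is not None:
        # Route the connection through a named integration runtime, e.g. a
        # self-hosted one that can reach an on-premises network.
        definition["properties"]["connectVia"] = {
            "referenceName": integration_runtime,
            "type": "IntegrationRuntimeReference",
        }
    return definition


# Landing zone for raw data; the connection string is a placeholder.
raw_storage = linked_service(
    "RawStorage",
    "AzureBlobStorage",
    {"connectionString": "<storage-connection-string>"},
)

# On-premises database reached through a hypothetical self-hosted runtime.
on_prem_sql = linked_service(
    "OnPremSql",
    "SqlServer",
    {"connectionString": "<sql-server-connection-string>"},
    integration_runtime="OnPremRuntime",
)

print(json.dumps(on_prem_sql, indent=2))
```

In practice, secrets such as connection strings are better referenced from a secret store than embedded in the definition; the placeholders here only mark where the connection details go.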

Transform and Enrich

The next step is to transform and enrich the raw data for each system so that it adheres to the canonical model. This can be accomplished with ADF pipelines and data flows. Pipelines orchestrate activities to accomplish tasks and objectives. This includes moving data, enriching data (e.g. from other data sources or APIs), and invoking data flow transformations. Azure provides many templates for common use cases (e.g. copying and moving data), which allow you to quickly create and configure pipelines. A full list can be found here. Pipelines can be triggered on demand, on a schedule, or in response to an event.

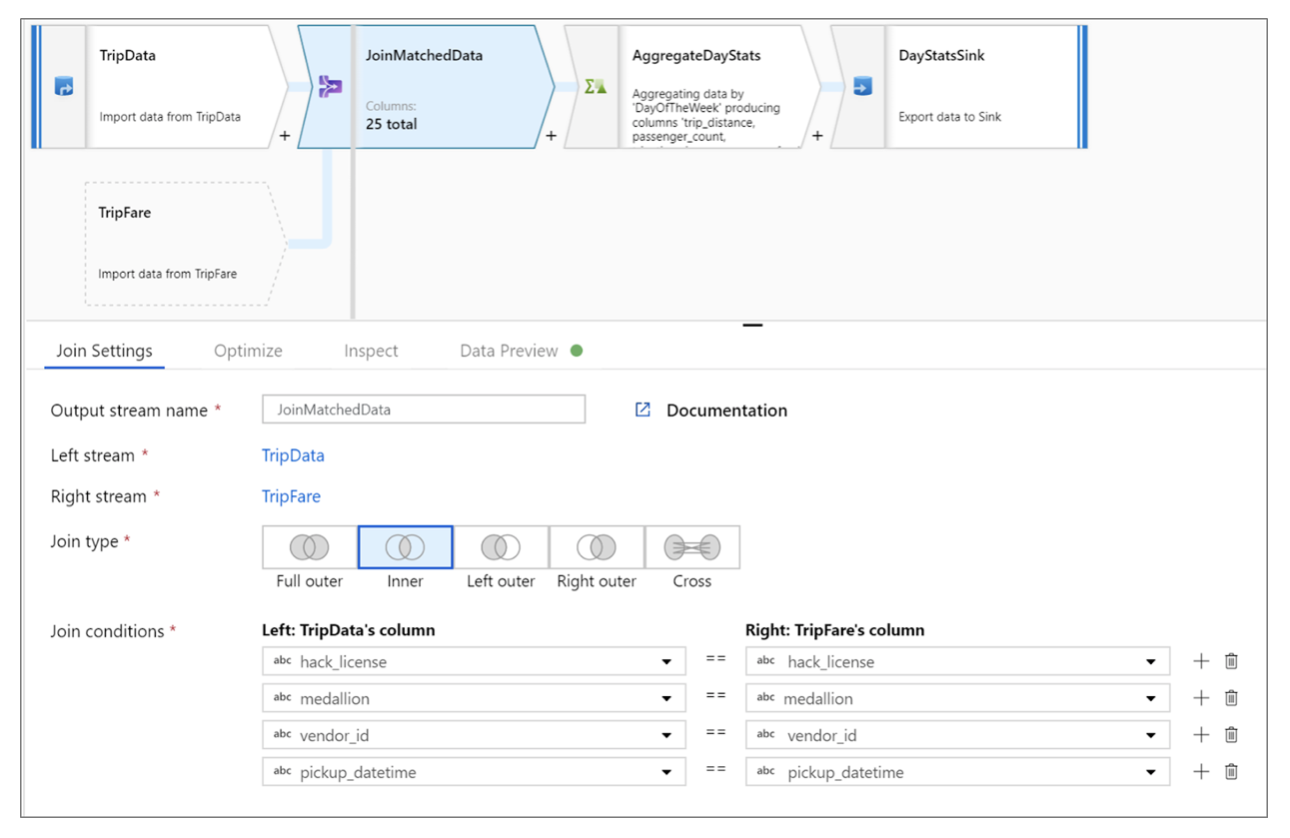
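As a rough illustration of what a pipeline looks like underneath the designer, the sketch below assembles a two-step pipeline definition in Python: a copy activity that lands raw data, followed by a data flow activity that runs only if the copy succeeds. The overall layout (`activities`, `dependsOn`, dataset and data flow references) mirrors ADF's pipeline JSON as I understand it, but the source and sink type names depend on the connector and should be verified. The pipeline, dataset, and data flow names, and the helpers `dataset_ref` and `unresolved_dependencies`, are hypothetical.

```python
import json


def dataset_ref(name):
    """Reference to a dataset that is assumed to be defined elsewhere."""
    return {"referenceName": name, "type": "DatasetReference"}


pipeline = {
    "name": "IngestAndConformCustomers",
    "properties": {
        "activities": [
            {
                # Step 1: copy the source table into raw storage.
                "name": "CopyRawCustomers",
                "type": "Copy",
                "inputs": [dataset_ref("OnPremCustomersTable")],
                "outputs": [dataset_ref("RawCustomersParquet")],
                "typeProperties": {
                    "source": {"type": "SqlServerSource"},
                    "sink": {"type": "ParquetSink"},
                },
            },
            {
                # Step 2: run a data flow, but only after the copy succeeds.
                "name": "ConformToCanonicalModel",
                "type": "ExecuteDataFlow",
                "dependsOn": [
                    {
                        "activity": "CopyRawCustomers",
                        "dependencyConditions": ["Succeeded"],
                    }
                ],
                "typeProperties": {
                    "dataflow": {
                        "referenceName": "ConformCustomers",
                        "type": "DataFlowReference",
                    }
                },
            },
        ]
    },
}


def unresolved_dependencies(pipeline_definition):
    """Return (activity, missing dependency) pairs; empty means all resolve."""
    activities = pipeline_definition["properties"]["activities"]
    known = {activity["name"] for activity in activities}
    return [
        (activity["name"], dependency["activity"])
        for activity in activities
        for dependency in activity.get("dependsOn", [])
        if dependency["activity"] not in known
    ]


print(json.dumps(pipeline, indent=2))
```

A small check like `unresolved_dependencies(pipeline)` is handy when definitions are kept in source control, since a renamed activity is an easy way to break a `dependsOn` reference.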

Data flows run on Apache Spark clusters that ADF provisions and manages for you, which makes it possible to process huge quantities of data. ADF provides a visual editor on top of Spark that eliminates the need to write the code that was traditionally required (e.g. Python, R, Scala). As a result, you get the power and speed of Spark without needing deep technical expertise. Data flows offer many transformations, including joins, aggregations, and filters. Another powerful feature is debug mode, which lets you inspect the data at each step. This gives you visibility into exactly what’s happening and helps you iron out any wrinkles in the transformation logic. Finally, once the data has been molded into the desired state, it can be saved to a curated location in Azure storage.
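For readers who have not worked with these transformations before, the following plain-Python sketch shows the logic of a filter, a join, and an aggregation on a tiny in-memory dataset. It is not ADF or Spark code; in a data flow you would configure the same steps visually. The customer and order records are made up for the example.

```python
from collections import defaultdict

# Made-up sample data standing in for two raw sources.
customers = [
    {"customer_id": "c1", "region": "Midwest"},
    {"customer_id": "c2", "region": "West"},
]
orders = [
    {"order_id": 1, "customer_id": "c1", "amount": 120.0, "status": "complete"},
    {"order_id": 2, "customer_id": "c1", "amount": 80.0, "status": "cancelled"},
    {"order_id": 3, "customer_id": "c2", "amount": 200.0, "status": "complete"},
    {"order_id": 4, "customer_id": "c1", "amount": 50.0, "status": "complete"},
]

# Filter: keep only completed orders.
completed = [order for order in orders if order["status"] == "complete"]

# Join: attach each order's region by looking up its customer (inner join).
region_by_customer = {c["customer_id"]: c["region"] for c in customers}
joined = [
    {**order, "region": region_by_customer[order["customer_id"]]}
    for order in completed
    if order["customer_id"] in region_by_customer
]

# Aggregate: total revenue per region.
revenue_by_region = defaultdict(float)
for row in joined:
    revenue_by_region[row["region"]] += row["amount"]

print(dict(revenue_by_region))
```

Inspecting `completed` and `joined` between steps is the hand-written equivalent of what debug mode offers: a look at the intermediate data after each transformation.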

Data Marts

Once your data has been curated, you’re ready to populate your data marts. Since a data mart is a subset of a traditional data warehouse, you can focus on bringing in only the information relevant to your defined business objectives. This can greatly reduce the time needed to complete your implementation, and because the dataset is smaller, your BI tools should perform better as well. Generally, it’s recommended to use Azure Synapse as your data store, but other options are available depending on your organization’s needs.
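The sketch below illustrates the "subset" idea using Python's built-in `sqlite3` module as a local stand-in for the data store. It is only an analogy: the SQL dialect and loading mechanics in Azure Synapse differ, and the table names, columns, and rows are hypothetical. The point is that the mart keeps just the columns and granularity a sales dashboard needs.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Curated table: full detail, including columns the dashboard never uses.
conn.execute(
    """
    CREATE TABLE curated_orders (
        order_id INTEGER,
        region TEXT,
        product TEXT,
        amount REAL,
        order_date TEXT,
        internal_notes TEXT
    )
    """
)
conn.executemany(
    "INSERT INTO curated_orders VALUES (?, ?, ?, ?, ?, ?)",
    [
        (1, "Midwest", "Widget", 100.0, "2024-01-05", "rush order"),
        (2, "Midwest", "Gadget", 50.0, "2024-01-20", ""),
        (3, "West", "Widget", 75.0, "2024-02-02", ""),
    ],
)

# Data mart: monthly revenue per region, nothing else.
conn.execute(
    """
    CREATE TABLE sales_mart AS
    SELECT region,
           substr(order_date, 1, 7) AS order_month,
           SUM(amount) AS revenue,
           COUNT(*) AS order_count
    FROM curated_orders
    GROUP BY region, substr(order_date, 1, 7)
    """
)

mart_rows = conn.execute(
    "SELECT region, order_month, revenue, order_count "
    "FROM sales_mart ORDER BY region, order_month"
).fetchall()
print(mart_rows)
```

A BI tool pointed at `sales_mart` reads a handful of pre-aggregated rows instead of scanning every order, which is where the performance benefit of a focused mart comes from.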

Extract Insights

Now that our data marts have been populated, we’re finally ready to connect our analytic tools (e.g. Power BI, Tableau, Qlik). This enables our business analysts to interact with our unified data and extract valuable insights!

Optimize Service Capabilities

We’ve seen how powerful Azure Data Factory can be for integrating systems and data across your organization when it is set up correctly. If you’re looking for a unified view of your data that can power transformational insights, need a team of skilled technologists, or lack a defined strategy, bring a partner on board. Zirous has 35 years of experience in the ever-changing IT landscape and 400+ years of combined consulting experience. We want to set you up for long-term success. Contact us so we can accomplish your goals together.
